Context:

- A controversial video circulating online shows actress Rashmika Mandanna entering an elevator, but it is actually a deepfake.

- The original video features Zara Patel, a British-Indian woman, with Mandanna’s face digitally inserted. This has sparked a major internet controversy.

Deepfake Technology – Increasing Threat of Deepfake Identities

- A recent survey on deepfake content reveals that adult content makes up 98 per cent of all deepfake videos online.

- 99 per cent of the individuals targeted in deepfake pornography are women.

- India ranks 6th among the nations most susceptible to deepfake adult content.


What is Deepfake Technology?

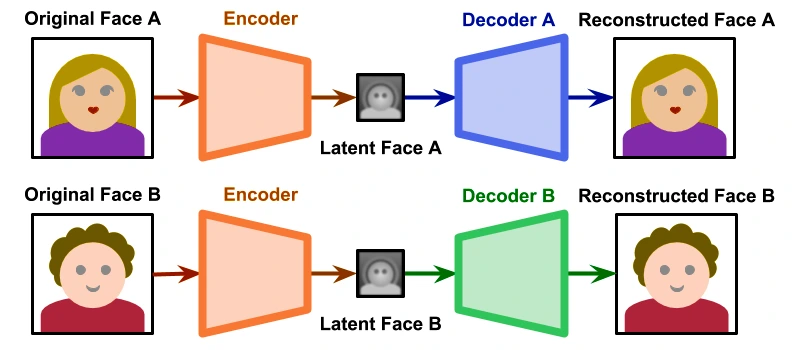

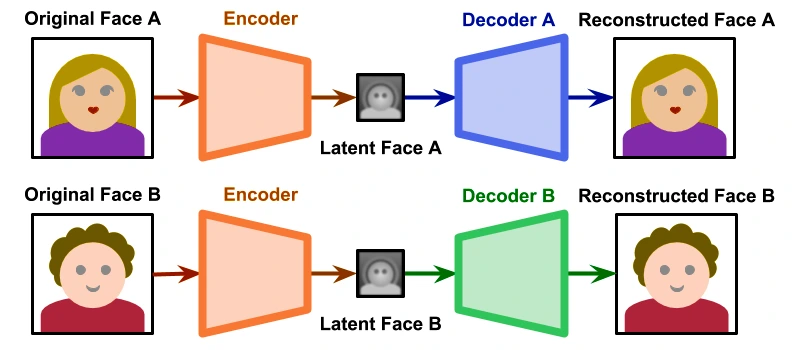

- Deepfakes are synthetic images, videos, or audio generated with machine-learning algorithms that replace a real person’s appearance, voice, or both with artificial likenesses; they are often used to spread misinformation.

- Origin of the Term: The term “deepfake” originated in 2017 from an anonymous Reddit user who called himself “Deepfakes.”

- This user used Google’s open-source deep-learning technology to create and post pornographic videos.

- Generative Adversarial Networks (GANs) have helped produce more convincing deepfakes. A GAN uses two AI algorithms: one generates the fake content and the other grades its efforts, which teaches the system to improve.

- A Generative Adversarial Network (GAN) is a deep learning architecture that consists of two neural networks competing against each other in a zero-sum game framework.
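To make the generator-versus-grader loop concrete, here is a deliberately tiny sketch in plain Python. It illustrates the adversarial idea under stated assumptions and is not how production deepfake models are built: the “real data” is a hypothetical 1-D Gaussian with mean 4, the generator is a linear map G(z) = a·z + b, the discriminator is a single logistic unit D(x) = sigmoid(w·x + c), and the learning rates and small weight-decay term are arbitrary choices. The discriminator step ascends log D(real) + log(1 − D(fake)); the generator step uses the common “non-saturating” variant, ascending log D(G(z)).

```python
import math
import random


def sigmoid(s: float) -> float:
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


class Generator:
    """Toy 'forger': turns noise z ~ N(0, 1) into a fake sample a*z + b."""

    def __init__(self) -> None:
        self.a, self.b = 1.0, 0.0

    def sample(self, rng: random.Random, n: int):
        zs = [rng.gauss(0.0, 1.0) for _ in range(n)]
        return zs, [self.a * z + self.b for z in zs]


class Discriminator:
    """Toy 'grader': D(x) = sigmoid(w*x + c), its estimate that x is real."""

    def __init__(self) -> None:
        self.w, self.c = 0.0, 0.0

    def score(self, x: float) -> float:
        return sigmoid(self.w * x + self.c)


def d_step(d, real, fake, lr=0.05, decay=0.01):
    """One gradient-ascent step on mean log D(real) + mean log(1 - D(fake)).
    Assumes the real and fake batches have the same size."""
    n = len(real)
    gw = gc = 0.0
    for x in real:  # push D(real) toward 1
        p = d.score(x)
        gw += (1.0 - p) * x
        gc += 1.0 - p
    for x in fake:  # push D(fake) toward 0
        p = d.score(x)
        gw -= p * x
        gc -= p
    d.w += lr * (gw / n - decay * d.w)  # arbitrary small weight decay to help damp oscillation
    d.c += lr * gc / n


def g_step(g, d, zs, lr=0.05):
    """One gradient-ascent step on mean log D(G(z)) (non-saturating generator loss)."""
    ga = gb = 0.0
    for z in zs:
        x = g.a * z + g.b
        dlog_dx = (1.0 - d.score(x)) * d.w  # derivative of log sigmoid(w*x + c) w.r.t. x
        ga += dlog_dx * z  # chain rule: dx/da = z
        gb += dlog_dx      # chain rule: dx/db = 1
    g.a += lr * ga / len(zs)
    g.b += lr * gb / len(zs)


def train(steps=2000, batch=64, seed=0):
    rng = random.Random(seed)
    g, d = Generator(), Discriminator()
    for _ in range(steps):
        real = [rng.gauss(4.0, 0.5) for _ in range(batch)]  # hypothetical "real" data
        _, fake = g.sample(rng, batch)
        d_step(d, real, fake)  # the grader learns to tell real from fake
        zs, _ = g.sample(rng, batch)
        g_step(g, d, zs)  # the forger adjusts to fool the current grader
    return g, d


if __name__ == "__main__":
    g, d = train()
    print(f"generator offset b = {g.b:.2f} (real data mean is 4.0)")
```

Because this discriminator is linear, it can only compare the two distributions along one direction (essentially their means), so the toy generator is pushed to shift its output toward the real data but is not guaranteed to match its spread. Real deepfake GANs use deep neural networks for both players, which lets the grader pick up far subtler differences.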

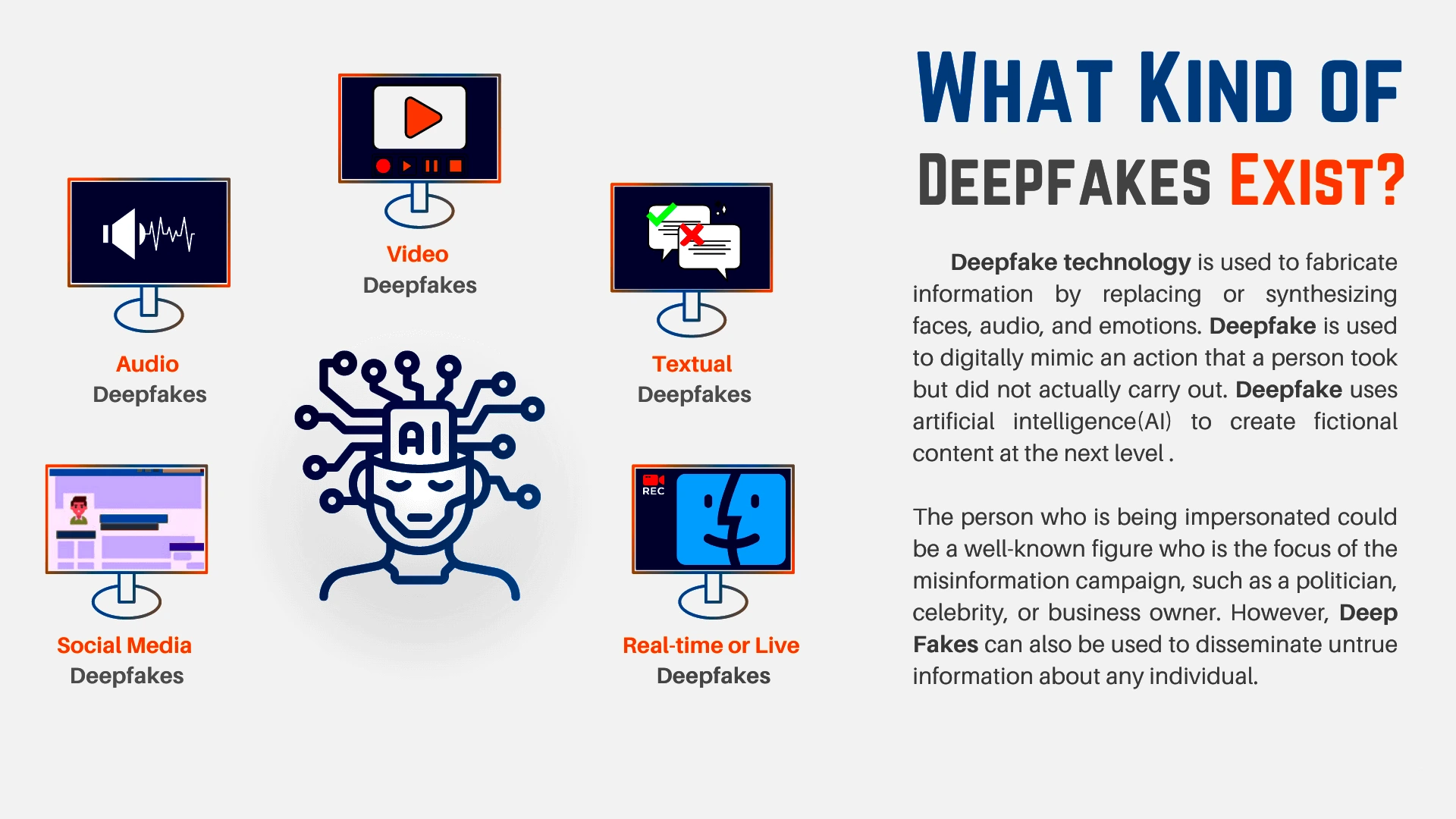

Types of Deepfake Technology

- Deepfake Videos and Images: Involves altering or fabricating content to display behavior or information different from the original source.

- Deepfake Audio: Poses a threat to voice-based authentication systems, especially for individuals with widely available voice samples like celebrities and politicians.

- Textual Deepfakes: Refers to written content that appears to be authored by a real person.

- Notable Impersonations: Deepfake technology has been used to impersonate prominent figures, including former U.S. Presidents Barack Obama and Donald Trump, India’s Prime Minister Narendra Modi, Facebook CEO Mark Zuckerberg, and Hollywood actor Tom Cruise, among others.

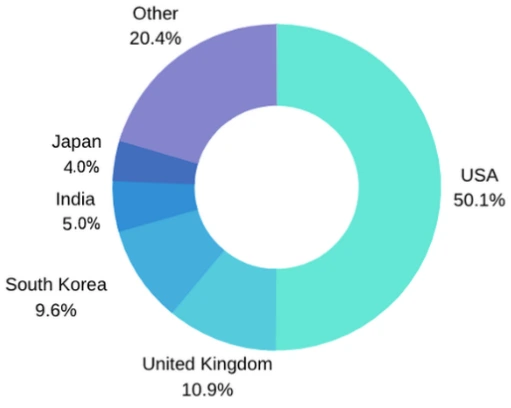

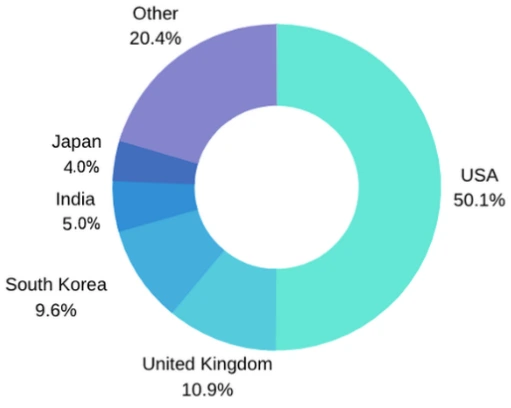

- Prevalence of Deepfakes (refer to image):

Spotting Deepfakes

- Unnatural Eye Movements: Look for irregular eye movements; in genuine videos, the eyes move smoothly and in step with speech and actions.

- Mismatches in Color and Lighting: Check for inconsistencies in lighting on the subject’s face and surroundings.

- Compare Audio Quality: Deepfake audio may contain imperfections, so check whether the sound matches the video, for example whether lip movements are in sync with speech.

- Strange Body Shape or Movement: Watch for unnatural body proportions or movements, especially during physical activities.

- Artificial Facial Movements: Identify exaggerated or unsynchronized facial expressions that don’t match the video’s context.

- Unnatural Positioning of Facial Features: Look for distortions or misalignments in facial features.

- Awkward Posture or Physique: Pay attention to awkward body positions, proportions, or movements that seem implausible.
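The eye-movement cue above can be partly automated. One landmark-based heuristic from early deepfake-detection work is to measure blinking with the eye aspect ratio (EAR), which drops sharply when the eyelids close. The sketch below assumes six (x, y) landmarks per eye per frame are already available from a separate face-landmark detector (not shown); the 0.2 threshold, the two-frame minimum, and the `eye` helper are illustrative assumptions, not calibrated values.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """EAR for six eye landmarks p1..p6: p1/p4 are the corners,
    p2/p3 lie on the upper lid, p5/p6 on the lower lid.
    EAR = (|p2 - p6| + |p3 - p5|) / (2 * |p1 - p4|)."""
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def count_blinks(frames, threshold=0.2, min_frames=2) -> int:
    """Count runs of at least `min_frames` consecutive frames whose EAR is below `threshold`."""
    blinks = run = 0
    for eye in frames:
        if eye_aspect_ratio(eye) < threshold:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # clip ended while the eye was still closed
        blinks += 1
    return blinks


def blinks_per_minute(frames, fps: float, **kwargs) -> float:
    """Blink rate for a clip of `len(frames)` frames recorded at `fps` frames per second."""
    return count_blinks(frames, **kwargs) * 60.0 * fps / len(frames)


def eye(h: float):
    """Hypothetical helper: synthetic eye 6 units wide with lid half-opening h (EAR = h / 3)."""
    return [(0.0, 0.0), (2.0, h), (4.0, h), (6.0, 0.0), (4.0, -h), (2.0, -h)]


if __name__ == "__main__":
    clip = [eye(1.5)] * 5 + [eye(0.3)] * 3 + [eye(1.5)] * 5 + [eye(0.3)] + [eye(1.5)] * 3
    print(count_blinks(clip))                 # 1 (the single closed frame is ignored)
    print(blinks_per_minute(clip, fps=17.0))  # 60.0
```

A rate far from a typical human baseline is at most a weak signal: newer deepfakes often blink normally, and real footage can show unusual blinking for innocent reasons, so this check would sit alongside the other cues in the list rather than replace them.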


What are the impacts of Deepfake Technology?

- Victims of Deep Fake Pornography: The primary victims of malicious deepfake technology are women, with over 96% of deepfakes being pornographic videos. This type of content threatens, intimidates, and psychologically harms individuals.

- As of September 2019, 96 per cent of deepfake videos online were pornographic, primarily categorised as “revenge porn”, according to a report by the company Sensity.

- Character Assassination: Deepfakes can portray individuals engaging in antisocial behaviour or saying things they never said. Even if victims can prove their innocence, the damage is often already done.

- In 2018, a deepfake video was posted online in which former US President Barack Obama appeared to verbally abuse Donald Trump.

- Erosion of Trust in Media: Deepfake technology contributes to a decline in trust in traditional media. This erosion can lead to a culture of factual relativism, damaging civil society.

- For example, in 2022 the Ukrainian television news outlet Ukraine 24 said that its live broadcast and website had been hacked, and that during the hack a chyron falsely stating that Ukraine had surrendered was displayed.

- Additionally, a deepfake video of Ukrainian President Volodymyr Zelensky circulated online in which he seemingly urged Ukrainians to surrender.

- National Security Threat: Malicious nation-states can use deepfakes to undermine public safety, create chaos, and sow uncertainty in target countries. This technology can also undermine trust in institutions and diplomacy.

- On May 22, 2023, a deepfake image purporting to show a towering column of dark smoke rising from the Pentagon was reported as genuine by a few Indian television news channels.

- Non-State Actors: Insurgent groups and terrorist organisations can use deepfakes to fabricate inflammatory speeches or provocative actions and spread them to incite anti-state sentiment among the public.

- For instance, a terrorist organisation could easily create a deepfake video showing Western soldiers dishonouring a religious place to inflame existing anti-West sentiment further.

- Liar’s Dividend: The existence of deepfakes can lead to genuine information being dismissed as fake news. Leaders may exploit the possibility of deepfakes and “alternative facts” to discredit genuine media and inconvenient truths.

- Scams and Hoaxes: These fakes can be used to impersonate individuals for fraudulent activities.

- According to a Speechify blog post, in 2020 a bank manager in the U.A.E. received a phone call from someone he believed was a company director. The manager recognised the voice and authorised a transfer of $35 million.


What is India’s Stand on Dealing with Deepfake Technology?

- In India, there are currently no laws that specifically target the use of deepfake technology.

- However, misuse of the technology can be addressed under existing laws on copyright violation, defamation, and cybercrime.


Laws against Deepfake Technology in India

- IT Act of 2000 – Section 66E: This section is applicable in cases of deepfake crimes that involve capturing, publishing, or transmitting a person’s images in mass media, violating their privacy.

- Offenders can face imprisonment for up to three years or a fine of up to ₹2 lakh.

- IT Act of 2000 – Section 66D: This section allows for the prosecution of individuals who use communication devices or computer resources with malicious intent to cheat or impersonate someone.

- It can result in imprisonment for up to three years and/or a fine of up to ₹1 lakh.

- Copyright Protection: The Indian Copyright Act of 1957 provides copyright protection for works, including films, music, and other creative content.

- Copyright owners can take legal action against individuals who create deepfakes using copyrighted works without permission.

- Section 51 of the Copyright Act defines when copyright is infringed, and Section 63 prescribes penalties for infringement.

- Government Advisory: On January 9, 2023, the Ministry of Information and Broadcasting issued an advisory to media organizations to exercise caution when airing content that could be manipulated or tampered with.

- The Ministry also recommended labeling manipulated content as “manipulated” or “modified” to inform viewers that the content has been altered.

Global Initiatives to Combat Deepfake Technology

- China: China has implemented a policy that requires deepfake content to be labelled and traceable to its source. Users need consent to edit someone’s image or voice, and news created with deepfake technology must come from government-approved outlets.

- European Union (EU): The EU has updated its Code of Practice to combat the spread of disinformation through deepfakes.

- Tech giants like Google, Meta (formerly Facebook), and Twitter are required to take measures to counter deepfakes and fake accounts on their platforms.

- Non-compliance can result in fines of up to 6% of their annual global turnover.

- The Code of Practice, introduced in 2018, aims to bring together industry players to combat disinformation.

- United States: The bipartisan Deepfake Task Force Act was introduced in the U.S. to assist the Department of Homeland Security (DHS) in countering deepfake technology.

- The proposed task force would be required to conduct annual studies and develop countermeasures.

Solutions to Combat Deepfake Technology

- Enhanced Media Literacy: Media literacy efforts must be strengthened to cultivate a discerning public, since consumer media literacy is the most effective tool against disinformation and deepfakes.

- Regulations: Implement meaningful regulations through collaborative discussions involving the technology industry, civil society, and policymakers.

- These regulations should disincentivize the creation and distribution of malicious deepfakes.

- Social Media Platform Policies: Encourage social media platforms to take action against deepfakes. Many platforms have already established policies or acceptable terms of use for deepfakes.

- These platforms should add dissemination controls, such as limiting sharing or downranking content, to stop the spread of deepfakes on their networks.

- Labelling content is another effective tool; it should be applied objectively and transparently, without political bias or business-model considerations.

- Technology Solutions: Develop accessible and user-friendly technology solutions to detect deepfakes, authenticate media, and promote authoritative sources.

- Individual Responsibility: Every individual should take responsibility for being a critical consumer of online media. Before sharing content on social media, pause and consider whether it is authentic. Practising responsible online behaviour is essential to combating the “infodemic.”

- Establish a Research and Development Wing: India can consider establishing a dedicated research and development entity similar to DARPA, which has been at the forefront of deepfake detection technologies.

- The Defense Advanced Research Projects Agency (DARPA), the research and development agency of the US Department of Defense (DoD), invested heavily in detection technologies through two overlapping programmes: Media Forensics (MediFor), which ended in 2021, and Semantic Forensics (SemaFor).

- These programmes aimed to develop advanced technologies for detecting deepfake media, including images and videos.
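The “Technology Solutions” point above mentions authenticating media. One basic building block is a cryptographic tag computed when content is published, so that any later alteration of the file becomes detectable. The sketch below uses Python’s standard `hmac` module with SHA-256 and a hypothetical publisher key; it is a minimal illustration, not a provenance system. Real provenance schemes (for example, the C2PA content-credentials standard) use public-key signatures and embedded metadata instead.

```python
import hashlib
import hmac

# Hypothetical secret held by the publisher; a real deployment would manage keys securely.
PUBLISHER_KEY = b"example-newsroom-secret"


def tag_media(content: bytes, key: bytes = PUBLISHER_KEY) -> str:
    """Compute an HMAC-SHA256 tag over the exact bytes of a media file."""
    return hmac.new(key, content, hashlib.sha256).hexdigest()


def verify_media(content: bytes, tag: str, key: bytes = PUBLISHER_KEY) -> bool:
    """Return True only if `content` is byte-for-byte what was tagged with `key`."""
    # compare_digest compares in constant time to avoid leaking timing information.
    return hmac.compare_digest(tag_media(content, key), tag)


if __name__ == "__main__":
    original = b"\x00\x01 frame bytes of a published clip"  # stand-in for a real video file
    tag = tag_media(original)
    print(verify_media(original, tag))            # True
    print(verify_media(original + b"\xff", tag))  # False: one extra byte breaks the tag
```

Two design limits are worth noting. With HMAC, only parties holding the same secret key can verify a tag, which is why public schemes prefer digital signatures. And a valid tag shows only that the file has not changed since it was tagged, not that the original recording was genuine.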


Conclusion:

The rise of deepfake technology poses significant threats to individuals, societies, and national security, prompting a need for global collaboration, regulatory measures, enhanced media literacy, and technological solutions to mitigate its harmful impacts and ensure the responsible use of artificial intelligence in the digital age.

7 Nov 2023