Context:

AI models are resource-intensive in terms of energy consumption, data requirements, and computational cost, and they contribute to carbon emissions.

AI Carbon Footprint: Key Highlights

- Source of emission: The emissions come from the infrastructure associated with AI, such as building and running the data centres that handle the large amounts of information required to sustain these systems.

- For example: Training GPT-3 (the precursor to the current ChatGPT) generated 502 metric tonnes of carbon dioxide equivalent, the same as driving 112 petrol-powered cars for a year.

- Technological approach to reduce emissions: Spiking neural networks (SNNs) and lifelong learning (L2) have the potential to lower AI's ever-increasing carbon footprint, with SNNs acting as an energy-efficient alternative to artificial neural networks (ANNs).

What is Artificial Intelligence?

- Artificial intelligence (AI) refers to the simulation of human intelligence in machines programmed to think like humans and mimic their actions.

The Lifetime of an AI System:

An AI system's lifetime can be split into two phases: training and inference.

- Training: During this phase, a relevant dataset is used to build and tune the system.

- For example: Training an AI system for use in self-driving cars would require a dataset of many different driving scenarios and the decisions human drivers made in them.

- Inference: The trained system generates predictions on new data it has not seen before.

- For example: The AI system predicts effective manoeuvres for a self-driving car.
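The two phases can be sketched in a few lines of Python. This is a minimal illustration, not a real self-driving model: the toy dataset, the one-feature linear model and the function names `train` and `infer` are hypothetical. Training loops over the whole dataset thousands of times to tune the parameters, whereas inference is a single short calculation for each new input.

```python
def train(xs, ys, lr=0.05, epochs=2000):
    """Training phase: fit y = w*x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):  # many passes over the whole dataset
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def infer(model, x):
    """Inference phase: one cheap calculation per new input."""
    w, b = model
    return w * x + b


# Hypothetical training data that follows y = 2x + 1.
xs = [0, 1, 2, 3, 4]
ys = [2 * x + 1 for x in xs]
model = train(xs, ys)

# Prediction for an input the model never saw during training.
print(round(infer(model, 10), 2))  # 21.0
```

The repeated passes in `train` are where most of the computation (and therefore energy) goes for a single model; `infer` reuses the learned parameters without revisiting the data.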

How Does Artificial Intelligence Generate Carbon Footprints?

- Data Processing and Training: Training an AI model involves processing vast datasets, which requires significant computational power and consumes a lot of energy, contributing to AI's carbon footprint.

- For example: OpenAI's GPT-3 and Meta's OPT models were estimated to emit more than 500 and 75 metric tonnes of carbon dioxide, respectively, during training.

- Carbon Footprint of Data Centers: The entire data center infrastructure and data transmission networks account for 2–4% of global CO2 emissions.

- For example: In a 2019 study, researchers from the University of Massachusetts Amherst found that training a single large AI model can emit up to 284,000 kilograms (626,000 pounds) of carbon dioxide equivalent.
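A common back-of-the-envelope way to estimate such figures multiplies the energy drawn by the computing hardware by the data centre's power usage effectiveness (PUE, the ratio of total facility energy to IT energy) and by the carbon intensity of the local electricity grid. The sketch below assumes that simple model; it is not necessarily the method used by the studies cited here, and the function names and sample inputs are illustrative only. It also checks the kilogram-to-pound conversion in the UMass figure and the per-car ratio implied by the GPT-3 comparison.

```python
KG_PER_LB = 0.45359237  # exact definition of the international pound


def training_emissions_kg(it_energy_kwh, pue, grid_kg_co2e_per_kwh):
    """Rough estimate: (IT energy x PUE) x grid carbon intensity, in kg CO2e."""
    facility_energy_kwh = it_energy_kwh * pue  # adds cooling and other overheads
    return facility_energy_kwh * grid_kg_co2e_per_kwh


def kg_to_lb(kg):
    return kg / KG_PER_LB


# Illustrative inputs only: 1,000 kWh of IT energy, PUE of 1.5, 0.4 kg CO2e per kWh.
print(training_emissions_kg(1_000, 1.5, 0.4))  # 600.0

# 284,000 kg from the 2019 study is roughly 626,000 lb.
print(round(kg_to_lb(284_000)))

# 502 t of CO2e spread over 112 cars is about 4.5 t per car per year.
print(round(502 / 112, 2))  # 4.48
```

Because the estimate is a product of three factors, the same training run can have very different footprints depending on the data centre's efficiency and on how clean the grid powering it is.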

Artificial Neural Networks (ANNs)

ANNs work by processing data and learning patterns from it, which enables them to make predictions.

- High Precision Demands: ANNs perform enormous numbers of multiplications with decimal (floating-point) numbers during training and inference. Performing these calculations at high precision requires significant computing power, memory, and time.

- Energy-Memory Trade-off: Computer hardware often trades processing speed against memory usage. High-precision decimal calculations need more memory, which further increases energy consumption.

- Growing Complexity, Growing Hunger: As ANNs become larger and more complex, the number of calculations rises sharply, which translates into a steep rise in energy demand.
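The multiplication load is easy to see in code. The sketch below, with hypothetical function names and made-up weights, computes one fully connected ANN layer by hand: every output needs one floating-point multiplication per input, so a layer with `n_in` inputs and `n_out` outputs performs `n_in * n_out` multiplications, and doubling both quadruples the count. It also shows how storage per weight grows with numeric precision, using Python's standard `struct` module to report the byte size of 16-, 32- and 64-bit floats.

```python
import struct


def dense_layer(inputs, weights, biases):
    """One fully connected layer with ReLU; also counts the multiplications performed."""
    outputs, mults = [], 0
    for row, bias in zip(weights, biases):
        total = bias
        for x, w in zip(inputs, row):
            total += x * w  # one floating-point multiply-accumulate
            mults += 1
        outputs.append(max(0.0, total))  # ReLU activation
    return outputs, mults


def layer_mults(n_in, n_out):
    """Multiplications needed by a fully connected layer of this shape."""
    return n_in * n_out


def weight_memory_bytes(n_params, fmt):
    """Storage for n_params weights: 'e' = 16-bit, 'f' = 32-bit, 'd' = 64-bit floats."""
    return n_params * struct.calcsize(fmt)


outputs, mults = dense_layer([0.5, -1.2, 3.0], [[0.1, 0.2, 0.3], [-0.4, 0.5, 0.6]], [0.0, 0.1])
print(mults)  # 6
print(layer_mults(2048, 2048) // layer_mults(1024, 1024))  # 4
for fmt in ("e", "f", "d"):
    gb = weight_memory_bytes(1_000_000_000, fmt) / 1e9
    print(f"1 billion weights at {8 * struct.calcsize(fmt)}-bit: {gb:.0f} GB")
```

Lower-precision formats halve or quarter the memory per weight, which is one reason reduced precision is used to cut the energy cost of ANNs.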

How the Human Brain Processes Information:

- Neurons in the human brain communicate with each other by transmitting intermittent electrical signals called spikes.

- The spikes themselves do not carry information; instead, the information lies in the timing of the spikes. Because spikes are binary and all-or-none (usually represented as 1 or 0), a neuron is active only when it spikes and inactive otherwise.

- This sparse, on-off activity is one of the reasons the brain processes information so energy-efficiently.

- Morse Code: Morse code is an old system of encoding messages that is used to send telegraphic information using signals and rhythm.
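One way to place information in spike timing is latency (time-to-first-spike) coding, one of several coding schemes used in SNN research; rate coding, which counts spikes, is another. In the hypothetical sketch below, a value between 0 and 1 becomes a train of 0s and 1s over 11 time steps, and a stronger input fires earlier. As with Morse code, every pulse is identical and the meaning lies in when it occurs. Each train also contains at most a single 1, so the neuron is silent for almost the whole window.

```python
WINDOW = 11  # time steps 0..10


def latency_encode(value, window=WINDOW):
    """Encode a value in [0, 1] as a binary spike train; stronger inputs spike earlier."""
    if not 0.0 <= value <= 1.0:
        raise ValueError("value must be in [0, 1]")
    train = [0] * window
    if value > 0:
        spike_time = round((1.0 - value) * (window - 1))
        train[spike_time] = 1
    return train


def latency_decode(train):
    """Recover the value from the time of the first spike (no spike means 0)."""
    if 1 not in train:
        return 0.0
    return 1.0 - train.index(1) / (len(train) - 1)


print(latency_encode(0.9))  # [0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0]
print(latency_encode(0.5))  # [0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0]
print(latency_decode(latency_encode(0.5)))  # 0.5
```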


Spiking Neural Networks (SNNs):

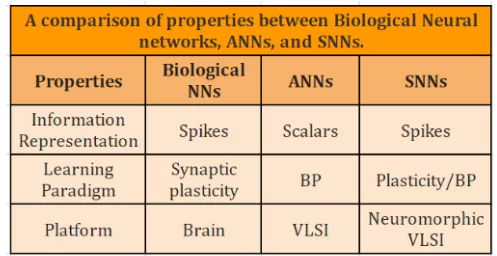

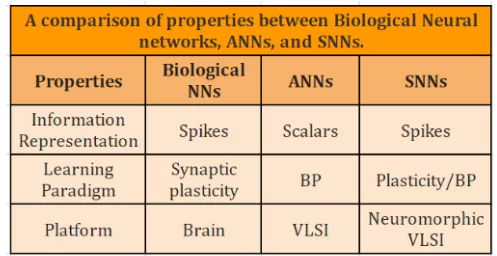

- Mimics the Human Brain: Both artificial neural networks (ANNs) and SNNs draw inspiration from the structure of the human brain, which contains billions of neurons (nerve cells) connected via synapses.

- Spiking Communication: Unlike ANNs, in which every neuron produces an output for every input, SNNs mimic the brain's communication method using timed electrical spikes.

- Just as Morse code uses specific sequences of dots and dashes to convey messages, SNNs use patterns or timings of spikes to process and transmit information.

- Energy Efficiency: This spiking approach makes SNNs highly energy-efficient. They consume power mainly when a spike occurs, which can cut energy use by up to 280 times compared with ANNs.

- Applications: Because of their low energy needs, SNNs are well suited to settings with limited power sources, such as space exploration, defence systems, and self-driving cars.
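The leaky integrate-and-fire (LIF) neuron is a widely used simplified neuron model in SNN work, and a few lines of Python capture how it behaves. This is a sketch under assumptions: the discrete-time update, the leak factor `beta`, the threshold and the input values are illustrative, and the energy savings reported for SNNs depend on dedicated neuromorphic hardware, which plain Python does not model. The neuron accumulates input, leaks a little each step, and emits a binary spike only when its membrane potential crosses the threshold. Downstream, a spike simply adds a synaptic weight, so inputs that stay silent cost no work, unlike an ANN layer that multiplies every input by every weight.

```python
def lif_neuron(input_currents, beta=0.9, threshold=1.0):
    """Simulate a discrete-time leaky integrate-and-fire neuron; return its spike train."""
    v = 0.0  # membrane potential
    spikes = []
    for current in input_currents:
        v = beta * v + current  # leak part of the old potential, add new input
        if v >= threshold:
            spikes.append(1)  # fire a spike...
            v = 0.0  # ...and reset the potential
        else:
            spikes.append(0)
    return spikes


def synaptic_input(spikes, weights):
    """Event-driven input: add a weight only for inputs that spiked (no multiplications)."""
    total, ops = 0.0, 0
    for spike, weight in zip(spikes, weights):
        if spike:
            total += weight
            ops += 1
    return total, ops


print(lif_neuron([0.3] * 10))  # [0, 0, 0, 1, 0, 0, 0, 1, 0, 0]
print(synaptic_input([1, 0, 0, 1], [0.5, 0.2, 0.9, 0.25]))  # (0.75, 2)
```

With a steady input the neuron fires only 2 times in 10 steps, and the downstream neuron performs 2 additions instead of 4 multiplications; this sparsity is the source of the efficiency described above.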

Lifelong Learning (L2)

- The Challenge of Sequential Learning: Training ANNs on new data in sequence can cause them to forget previously learned information, a problem known as catastrophic forgetting.

- Retraining and Emissions: As a result, models often have to be retrained from scratch whenever the operating environment changes, which increases the overall energy footprint of AI.

- L2 to the Rescue: Lifelong Learning algorithms enable sequential training on multiple tasks with minimal forgetting.

- L2 enables models to keep learning throughout their lifetime by building on existing knowledge rather than being retrained from scratch.
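The article does not name a specific L2 algorithm. One well-known family is rehearsal (or replay), which keeps a small memory of earlier examples and mixes them into training on the new task; regularisation methods such as elastic weight consolidation are another. The toy sketch below uses replay with a two-weight linear model and two hypothetical one-example tasks (all names and data are illustrative): training on task B alone overwrites what was learned for task A, while replaying the stored task-A example lets gradient descent find weights that satisfy both, continuing from the existing weights rather than restarting from scratch.

```python
def predict(w, x):
    return sum(wi * xi for wi, xi in zip(w, x))


def mse(w, data):
    """Mean squared error of the linear model w on a list of (x, y) pairs."""
    return sum((predict(w, x) - y) ** 2 for x, y in data) / len(data)


def gradient_descent(w, data, lr=0.1, epochs=2000):
    """Continue training from weights w (not from scratch) on the given data."""
    w = list(w)
    for _ in range(epochs):
        grads = [0.0] * len(w)
        for x, y in data:
            err = predict(w, x) - y
            for i, xi in enumerate(x):
                grads[i] += 2 * err * xi / len(data)
        w = [wi - lr * g for wi, g in zip(w, grads)]
    return w


# Hypothetical tasks, each a single (input, target) example.
task_a = [((1.0, 1.0), 2.0)]
task_b = [((1.0, 0.0), 3.0)]

w_a = gradient_descent([0.0, 0.0], task_a)  # learn task A first
w_naive = gradient_descent(w_a, task_b)  # then task B alone
w_replay = gradient_descent(w_a, task_b + task_a)  # task B plus replayed task-A memory

print(round(mse(w_naive, task_a), 2))  # 4.0 (task A forgotten)
print(round(mse(w_replay, task_a), 2), round(mse(w_replay, task_b), 2))  # 0.0 0.0
```

Replay still stores some old data, but typically only a small sample of it, which is far cheaper than retraining on the full original dataset each time the environment changes.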

The Future of Energy-Efficient AI

- Smaller Models: Research is ongoing to develop smaller AI models with the same capabilities as larger ones, further reducing energy demands.

- Quantum Computing’s Potential: Advancements in quantum computing offer a completely different approach to computing, potentially enabling faster and more energy-efficient training and inference for both ANNs and SNNs.

- Proactive Solutions: The rapid growth of AI calls for proactive measures to develop energy-efficient solutions before its carbon footprint grows even larger.


News Source: The Hindu

8 Mar 2024