Context

The M4 chip, introduced by Apple in the iPad Pro, includes a 16-core Neural Engine, which is Apple's name for its Neural Processing Unit (NPU).

About Neural Processing Unit (NPU)

- A neural processing unit (NPU) is a dedicated processor designed specifically to accelerate neural network computations.

- Neural Network: A type of machine learning model, loosely inspired by the human brain, used for processing data.

- Capability to Handle AI-Related Tasks: The NPU handles the machine learning operations that form the basis of AI-related tasks, such as speech recognition, natural language processing, and photo or video editing features like object detection.

- NPU Integration in Consumer Devices and Data Centers: In most consumer-facing gadgets such as smartphones, laptops and tablets, the NPU is integrated within the main processor, adopting a System-on-Chip (SoC) configuration.

- However, in data centres, the NPU may be an entirely discrete processor, separate from other processing units such as the central processing unit (CPU) or the graphics processing unit (GPU).
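To make "neural network processes" concrete, here is a minimal sketch of the arithmetic inside one layer of a neural network: each output is a weighted sum of the inputs (a series of multiply-accumulate steps) passed through an activation function. The function name `dense_layer`, the sigmoid activation, and all numeric values are illustrative assumptions, not taken from any particular chip or framework.

```python
import math

def dense_layer(inputs, weights, biases):
    """Forward pass of one fully connected layer with a sigmoid activation."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        # Multiply-accumulate: the kind of operation NPUs are built to speed up.
        total = bias
        for x, w in zip(inputs, neuron_weights):
            total += x * w
        # Sigmoid squashes the weighted sum into the range (0, 1).
        outputs.append(1.0 / (1.0 + math.exp(-total)))
    return outputs

# Hypothetical toy values: 3 inputs feeding 2 neurons.
inputs = [0.5, -1.0, 2.0]
weights = [[0.1, 0.2, 0.3], [-0.4, 0.5, 0.6]]
biases = [0.0, 0.1]

activations = dense_layer(inputs, weights, biases)
print(activations)
```

Real models repeat this pattern across many layers and a very large number of weights, which is why hardware dedicated to it can pay off.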


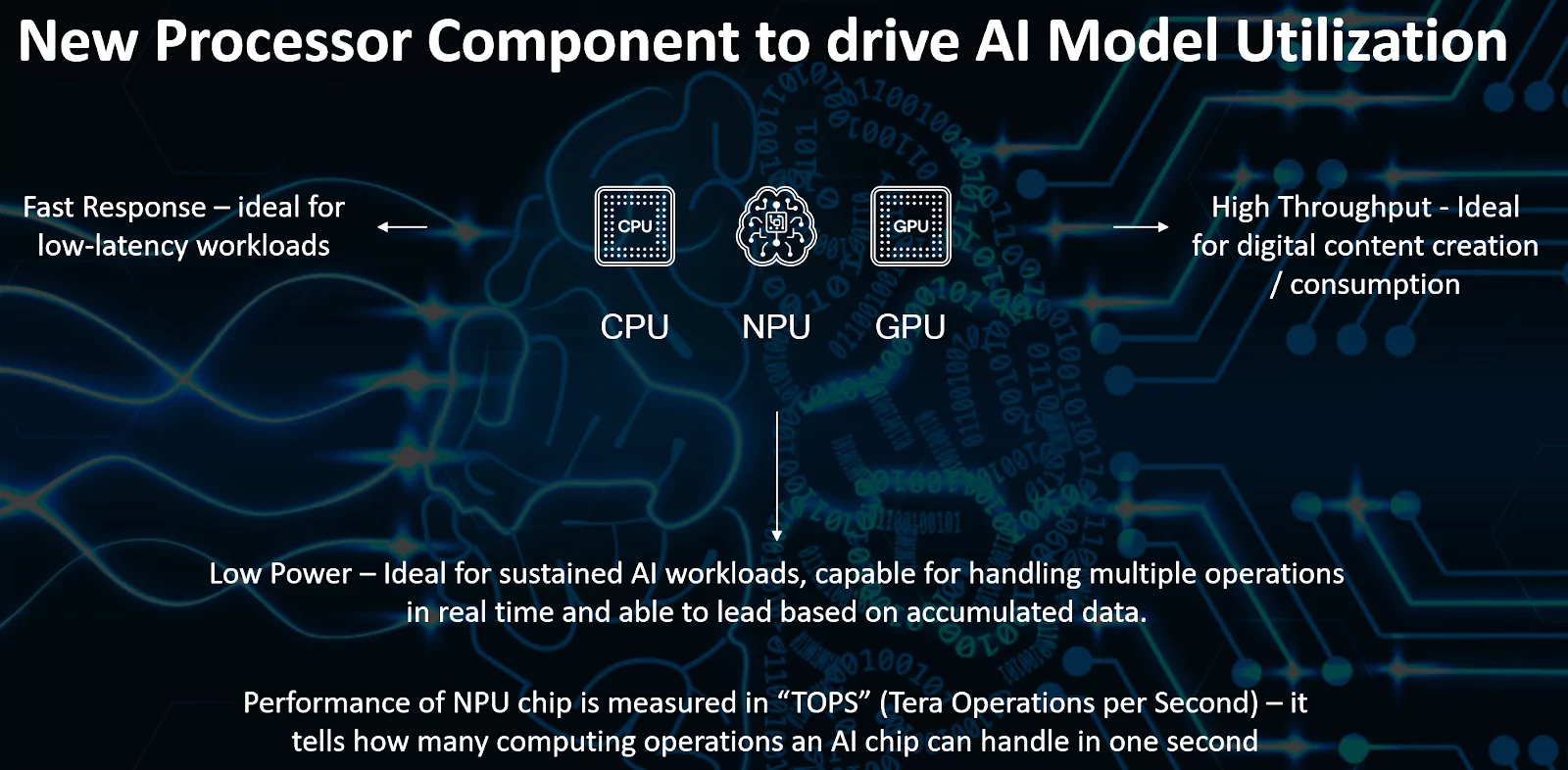

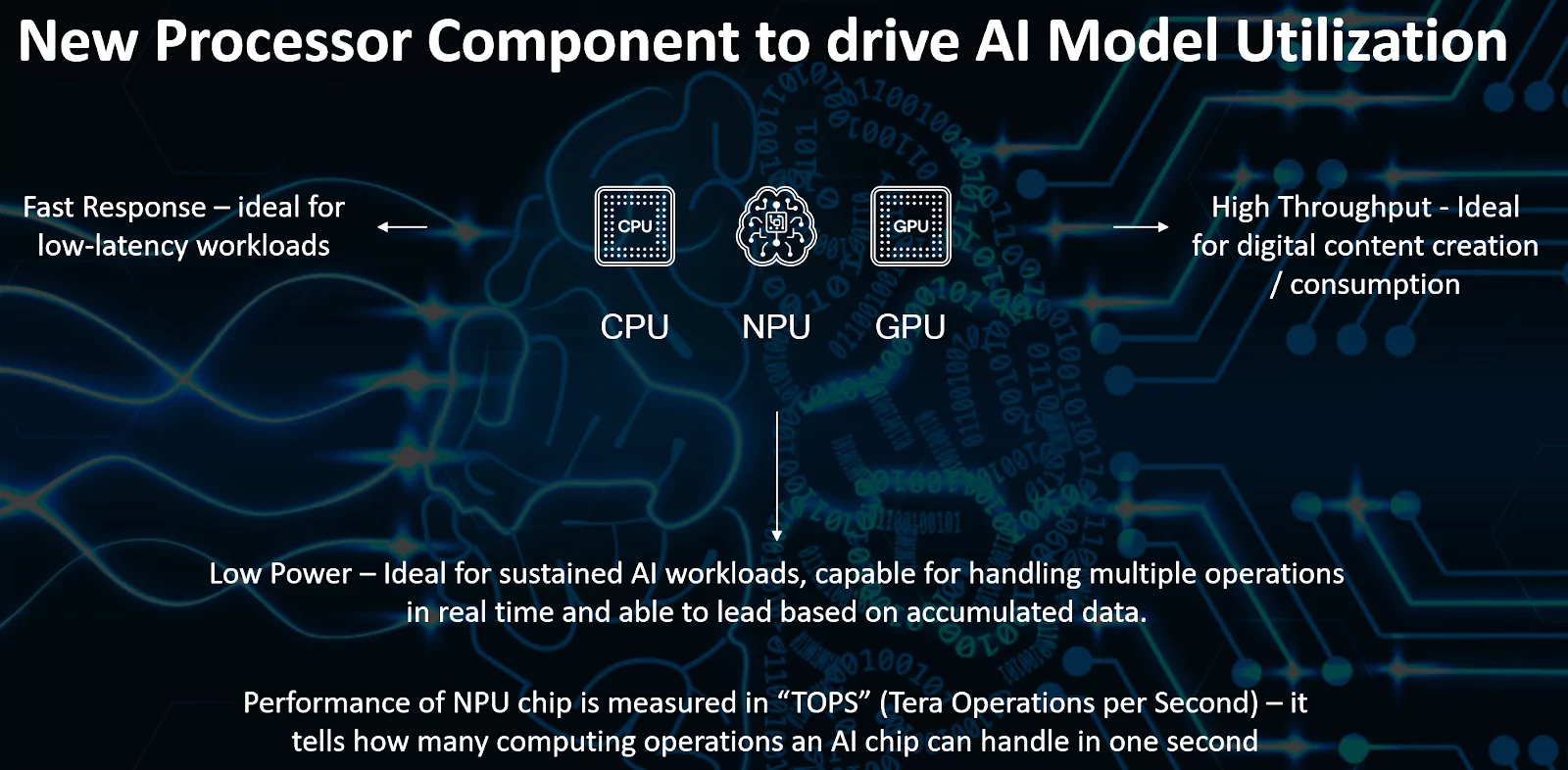

How is a Neural Processing Unit different from a CPU and GPU?

- CPUs: They employ a sequential computing method, issuing one instruction at a time, with each subsequent instruction waiting for its predecessor to complete.

- In contrast, an NPU harnesses parallel computing to execute numerous calculations simultaneously. This parallel approach results in faster and more efficient processing.

- Thus, CPUs are good at sequential computing, executing one process at a time, but running AI tasks requires the processor to execute multiple calculations and processes simultaneously.

- Graphics Processing Units (GPUs): These processors possess parallel computing capabilities and incorporate integrated circuits designed to execute AI workloads alongside other tasks such as graphics rendering and resolution upscaling.


- NPUs replicate these circuits but dedicate them solely to machine learning operations. This dedicated functionality leads to more efficient AI workload processing with reduced power consumption.

- GPUs are still used in the initial development and refinement of AI algorithms, while NPUs run those refined models on the consumer's device at a later stage.
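The sequential-versus-parallel contrast can be sketched with a matrix-vector product, a typical neural network calculation. Each output row depends only on its own inputs, so the rows can be worked on at the same time. This is only an illustration of that independence: the function names are hypothetical, and Python threads do not deliver true hardware parallelism for this kind of arithmetic, so no speed-up should be expected from the sketch itself.

```python
from concurrent.futures import ThreadPoolExecutor

def dot(row, vector):
    """One output value: a chain of multiply-accumulate steps."""
    return sum(r * v for r, v in zip(row, vector))

def matvec_sequential(matrix, vector):
    # Sequential style: finish one row before starting the next.
    return [dot(row, vector) for row in matrix]

def matvec_parallel(matrix, vector, workers=4):
    # Each row is independent, so rows can be handed to separate workers.
    # pool.map returns results in the same order as the input rows.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda row: dot(row, vector), matrix))

# Hypothetical toy data.
matrix = [[1, 2, 3], [4, 5, 6], [7, 8, 9], [10, 11, 12]]
vector = [1, 0, -1]

sequential_result = matvec_sequential(matrix, vector)
parallel_result = matvec_parallel(matrix, vector)
print(sequential_result)                     # [-2, -2, -2, -2]
print(sequential_result == parallel_result)  # True
```

Both versions give the same answer; the difference is in how the work could be scheduled. Dedicated hardware exploits this by providing many arithmetic units that process such independent calculations simultaneously.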

Neural Processing Unit and on-device AI

- Transition to Smaller Language Models: Large language models (LLMs) are often too large to be run on-device, leading service providers to offload processing to the cloud to deliver AI features based on their language models.

- However, major technology companies are now introducing smaller language models like Google’s Gemma, Microsoft’s Phi-3, and Apple’s OpenELM.

- Emergence of On-Device AI Models: This shift towards scaled-down AI models capable of running entirely on-device is gaining traction.

- As on-device AI models become increasingly prominent, the role of NPUs becomes crucial, as they are responsible for running AI-powered applications directly on the device's hardware.
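A rough back-of-the-envelope estimate shows why model size decides whether a model can run on-device: the memory needed just to hold the weights is roughly the number of parameters multiplied by the bytes used per parameter. The parameter counts, the 16-bit weight format, and the 8 GB device memory below are illustrative assumptions, not figures for any specific model or product.

```python
def model_size_gb(num_parameters, bits_per_weight):
    """Approximate storage for the weights alone, in gigabytes (10**9 bytes)."""
    return num_parameters * bits_per_weight / 8 / 1e9

def fits_on_device(num_parameters, bits_per_weight, available_gb):
    """True if the weights alone fit in the given amount of memory."""
    return model_size_gb(num_parameters, bits_per_weight) <= available_gb

# Illustrative assumptions, not vendor figures.
DEVICE_MEMORY_GB = 8
large_model = 70_000_000_000  # hypothetical large language model
small_model = 3_000_000_000   # hypothetical small language model

for name, params in [("large", large_model), ("small", small_model)]:
    size = model_size_gb(params, bits_per_weight=16)
    fits = fits_on_device(params, 16, DEVICE_MEMORY_GB)
    print(name, size, "GB, fits on device:", fits)
```

Under these assumptions the large model needs about 140 GB for its weights and the small one about 6 GB, so only the small model fits. The estimate ignores the extra memory needed while the model is running, so real requirements are higher.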

Small Language Models (SLMs): SLMs are more streamlined versions of large language models.

- When compared to LLMs, smaller AI models are cost-effective to develop and operate, and they perform better on smaller devices like laptops and smartphones.

- SLMs are great for resource-constrained environments, including on-device and offline inference scenarios.


12 May 2024
